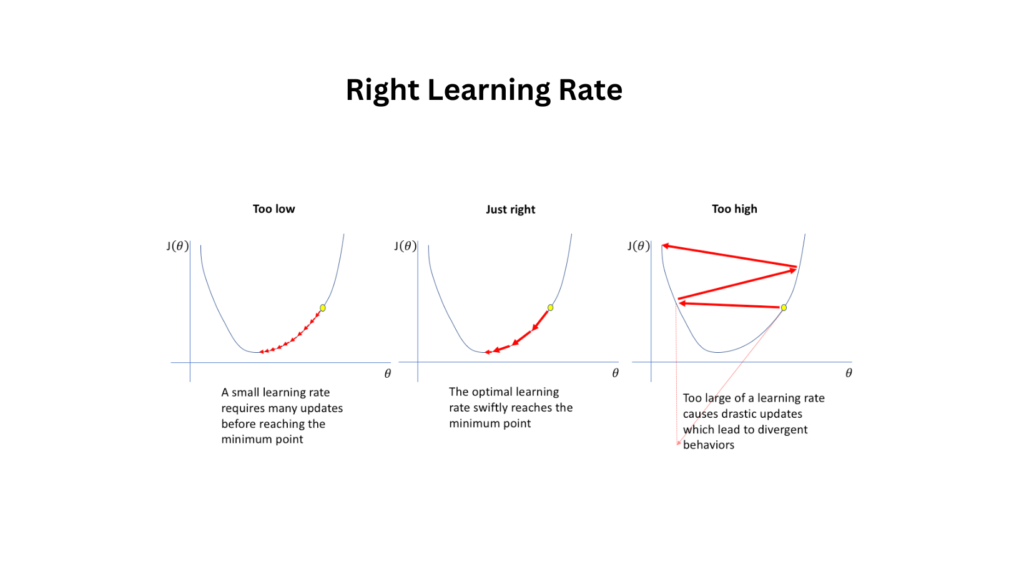

Choosing the right learning rate in deep learning is like finding the perfect balance when seasoning a dish. Too much salt? Overwhelming. Too little? Bland. Similarly, an overly high learning rate can cause your model to overshoot optimal solutions, while one that’s too low can make training feel endless.

But how do you strike that balance? Let’s break it down into simple, actionable steps.

1. What Is the Learning Rate?

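The learning rate is the step size your model takes when updating its weights during training. At each step, the optimizer nudges every weight in the direction that reduces the loss, and the learning rate scales how far it moves. Think of it as how aggressively your model learns from each batch of data. A well-chosen learning rate lets the model improve steadily without stumbling or dragging its feet.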
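In symbols, one plain gradient-descent step updates a weight as w_new = w - lr * dL/dw, where lr is the learning rate and L is the loss. Here is a minimal sketch, assuming a single weight and a toy loss L(w) = (w - 3)**2 whose gradient we write by hand (real frameworks compute gradients automatically):

```python
def sgd_step(w: float, grad: float, lr: float) -> float:
    """One plain gradient-descent update: step against the gradient, scaled by lr."""
    return w - lr * grad


# Toy loss L(w) = (w - 3)**2, with gradient 2 * (w - 3); its minimum is at w = 3.
w = 0.0
for _ in range(50):
    w = sgd_step(w, grad=2 * (w - 3), lr=0.1)

print(round(w, 4))  # prints 3.0
```

Each step shrinks the distance to the minimum by a factor of (1 - 2 * 0.1) = 0.8, which is why the weight ends up essentially at 3 after 50 steps.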

2. Why Does It Matter?

Imagine hiking toward a mountain peak. If your steps are too big, you risk overshooting or even tumbling down the other side. If they’re too small, you’ll tire out before reaching the top. The learning rate plays a similar role: it determines whether your model converges to a good solution efficiently, crawls toward it, or never gets there at all.
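The analogy is easy to check numerically. A small sketch, assuming the toy loss L(x) = x**2 (gradient 2x) rather than a real network: each gradient step multiplies x by (1 - 2 * lr), so the distance to the minimum at 0 shrinks only when |1 - 2 * lr| < 1.

```python
def run_gd(lr: float, steps: int = 20, x0: float = 1.0) -> list[float]:
    """Gradient descent on L(x) = x**2 (gradient 2x); returns |x| after each step."""
    x = x0
    history = []
    for _ in range(steps):
        x = x - lr * 2 * x  # same as x *= (1 - 2 * lr)
        history.append(abs(x))
    return history


for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: |x| after 20 steps = {run_gd(lr)[-1]:.4g}")
```

With lr = 0.001 the value barely moves (|x| stays around 0.96), 0.1 converges quickly, and 1.1 overshoots on every step and diverges, because |1 - 2 * 1.1| = 1.2 > 1. Real networks are far messier, but the same overshoot mechanism is what makes a loss explode.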

3. Tips for Choosing the Right Learning Rate

a. Start with a Learning Rate Range Test

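- Choose a range of candidate values that starts small and spans several orders of magnitude (e.g., 0.001 to 1).

- Train your model for a few epochs, gradually increasing the learning rate after each mini-batch.

- Plot the loss against the learning rate. Look for the region where the loss drops steadily; a good value usually lies there, comfortably below the point where the loss starts to climb again.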
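Here is a rough sketch of the range test in plain Python. The toy data (y = 2x plus noise), the single-weight model, the batch size of 16, the loss smoothing, and the rule "stop once the smoothed loss exceeds 4 times its best value" are all illustrative assumptions, loosely modeled on how learning rate finders such as fastai's behave; this is not any library's actual implementation. The sweep is exponential so that each order of magnitude gets equal attention, and the upper end is pushed to 10 so the blow-up is visible on this toy problem.

```python
import math
import random


def lr_range_test(lr_min=1e-3, lr_max=10.0, num_steps=100, beta=0.9, seed=0):
    """Sweep the learning rate exponentially from lr_min to lr_max, one mini-batch
    per value, and return (learning_rates, smoothed_losses)."""
    rng = random.Random(seed)
    # Hypothetical toy problem: fit y = 2x + noise with a single weight w.
    xs = [rng.uniform(-1, 1) for _ in range(512)]
    data = [(x, 2 * x + rng.gauss(0, 0.1)) for x in xs]
    w, lr, avg = 0.0, lr_min, 0.0
    growth = (lr_max / lr_min) ** (1 / (num_steps - 1))  # multiply lr by this each step
    lrs, smoothed = [], []
    for step in range(1, num_steps + 1):
        batch = rng.sample(data, 16)
        loss = sum((w * x - y) ** 2 for x, y in batch) / len(batch)     # mean squared error
        grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)  # dLoss/dw
        avg = beta * avg + (1 - beta) * loss  # exponential moving average of the loss
        smooth = avg / (1 - beta ** step)     # bias correction for the first few steps
        lrs.append(lr)
        smoothed.append(smooth)
        if not math.isfinite(smooth) or smooth > 4 * min(smoothed):
            break  # loss is blowing up: larger rates are not worth testing
        w -= lr * grad
        lr *= growth
    return lrs, smoothed


lrs, losses = lr_range_test()
# Rule of thumb (an assumption, not a law): pick a rate about 10x below the loss minimum.
suggested = lrs[losses.index(min(losses))] / 10
print(f"stopped after {len(lrs)} steps; suggested lr ~ {suggested:.3g}")
```

The "divide by 10" line is only a common heuristic; on a real model, plotting lrs against losses and reading the curve yourself is the more reliable step.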

b. Use Predefined Schedules

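Optimizers such as Adam or SGD with momentum don’t schedule the learning rate on their own, but most frameworks ship schedulers that work alongside them (PyTorch, for example, has the torch.optim.lr_scheduler module). A schedule changes the learning rate over time, typically lowering it as training progresses to prevent overshooting once the model is close to a minimum.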
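As a concrete example, step decay multiplies the learning rate by a factor gamma every step_size epochs. PyTorch’s torch.optim.lr_scheduler.StepLR applies this same rule to an optimizer; the sketch below only computes the value in plain Python so it runs without a framework, and the defaults (step_size=10, gamma=0.1) are illustrative.

```python
def step_decay(base_lr: float, epoch: int, step_size: int = 10, gamma: float = 0.1) -> float:
    """Multiply the learning rate by gamma once every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


for epoch in (0, 9, 10, 25):
    print(epoch, step_decay(0.1, epoch))
```

Starting from 0.1, this keeps the rate at 0.1 for epochs 0 to 9, drops it to about 0.01 for epochs 10 to 19, and to about 0.001 for epochs 20 to 29.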

c. Experiment with Learning Rate Annealing

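- Warm-up: Start with a small learning rate and increase it gradually over the first few epochs until it reaches the target value.

- Decay: Reduce the learning rate as training progresses so the model can settle into a minimum and refine its accuracy.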
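Warm-up and decay are often combined into a single schedule. A minimal sketch, assuming a linear warm-up followed by cosine decay; cosine is one popular choice among several, and step or exponential decay would fit the description just as well. The step counts and peak value are hypothetical defaults, not recommendations.

```python
import math


def warmup_cosine_lr(step: int, total_steps: int, peak_lr: float = 1e-3,
                     warmup_steps: int = 500, min_lr: float = 0.0) -> float:
    """Linear warm-up to peak_lr, then cosine decay down to min_lr at total_steps."""
    if step < warmup_steps:
        # Warm-up: ramp linearly from peak_lr / warmup_steps up to peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    # Decay: progress runs from 0 (end of warm-up) to 1 (end of training).
    progress = min((step - warmup_steps) / max(1, total_steps - warmup_steps), 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1 + math.cos(math.pi * progress))


# Learning rate for every step of a hypothetical 10,000-step run.
schedule = [warmup_cosine_lr(s, total_steps=10_000) for s in range(10_000)]
```

The warm-up keeps early updates small while the weights are still random, and the cosine tail lowers the rate smoothly rather than in abrupt drops.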

d. Leverage Adaptive Techniques

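Methods like Cyclical Learning Rates reduce the guesswork. Instead of committing to a single value, the learning rate cycles between a lower and an upper bound during training, which can improve training efficiency. Learning rate finders, which automate the range test from step a, help you pick those bounds.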
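The simplest cyclical policy, from Leslie Smith’s work on cyclical learning rates, is the "triangular" one: the rate climbs linearly from a lower to an upper bound and falls back again. A sketch of that formula in plain Python, with hypothetical bounds and cycle length (in practice the bounds would come from a range test). PyTorch exposes the same policy as torch.optim.lr_scheduler.CyclicLR with mode="triangular".

```python
import math


def triangular_clr(iteration: int, base_lr: float = 1e-4, max_lr: float = 1e-2,
                   step_size: int = 2000) -> float:
    """Triangular cyclical learning rate: rise linearly from base_lr to max_lr over
    step_size iterations, fall back over the next step_size, and repeat."""
    cycle = math.floor(1 + iteration / (2 * step_size))  # which cycle we are in
    x = abs(iteration / step_size - 2 * cycle + 1)       # 1 at the ends, 0 at the peak
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)
```

With these defaults, iteration 0 sits at base_lr, iteration 2000 reaches max_lr, and iteration 4000 is back at base_lr, where the next cycle begins.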

4. Watch Out for Warning Signs

- Too high: Training loss oscillates wildly, fails to decrease, or shoots up to NaN.

- Too low: Training loss decreases very slowly, wasting time and compute.
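These warning signs can be turned into a rough automated check on a recorded loss history. The thresholds below (a 5% total drop, increases on more than 40% of steps) are hypothetical and would need tuning for a real model; treat the function as a sanity check, not a diagnosis.

```python
import math


def diagnose_lr(losses: list[float], min_rel_drop: float = 0.05,
                max_up_fraction: float = 0.4) -> str:
    """Rough, hypothetical heuristic for reading a training-loss history."""
    if len(losses) < 2:
        raise ValueError("need at least two loss values")
    # Diverged: NaN/inf, or the loss ended above where it started.
    if any(not math.isfinite(v) for v in losses) or losses[-1] > losses[0]:
        return "too high: loss diverged or ended above where it started"
    # Oscillating: the loss went up on a large share of steps.
    ups = sum(1 for a, b in zip(losses, losses[1:]) if b > a)
    if ups / (len(losses) - 1) > max_up_fraction:
        return "possibly too high: loss oscillates (rises on many steps)"
    # Crawling: the total relative improvement is tiny.
    if (losses[0] - losses[-1]) / losses[0] < min_rel_drop:
        return "possibly too low: loss is barely moving"
    return "looks reasonable"


print(diagnose_lr([1.0, 0.7, 0.5, 0.4]))
```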

5. Personal Insight: My Own "Aha!" Moment

When I first trained a deep learning model, I spent hours tweaking hyperparameters, frustrated by inconsistent results. Then I discovered the learning rate range test. It was a game-changer, like flipping a light switch in a dark room. I saw immediate improvements and understood how much of an impact this tiny number can have.

6. Fine-Tuning and Continuous Adjustment

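Choosing the right learning rate is just the beginning. As you dive deeper into training, you may find that adjustments are necessary along the way; it’s not uncommon to tweak the learning rate mid-training based on how the model behaves. Think of this as fine-tuning the learning rate itself (not to be confused with fine-tuning a pretrained model on a new task, which is what the term usually means in deep learning).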

For instance, after watching the loss curve for a few epochs, you might notice that your model is converging too slowly. In that case, slightly increasing the learning rate might speed things up without compromising the model’s stability. On the flip side, if the loss plateaus or even starts to rise, it may be time to reduce the learning rate so the model can refine its weights more carefully.
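The "reduce it when the loss plateaus" half of this advice is common enough that frameworks automate it (PyTorch’s torch.optim.lr_scheduler.ReduceLROnPlateau, for instance). Below is a simplified sketch of that logic, assuming you record a validation loss once per epoch; the class name PlateauReducer, the halving factor, and the patience of 2 epochs are illustrative choices, and the sketch only ever lowers the rate.

```python
class PlateauReducer:
    """Cut the learning rate by `factor` once the monitored loss has gone more than
    `patience` consecutive epochs without improving. Simplified sketch."""

    def __init__(self, lr: float, factor: float = 0.5, patience: int = 2,
                 min_lr: float = 1e-6):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> float:
        if val_loss < self.best:
            self.best = val_loss  # new best: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0  # give the new rate a fresh chance
        return self.lr


scheduler = PlateauReducer(lr=0.1)
for val_loss in [1.0, 0.8, 0.7, 0.71, 0.72, 0.70]:
    lr = scheduler.step(val_loss)
print(lr)
```

Here the loss stops improving after 0.7, so the third epoch without a new best triggers one cut, from 0.1 to 0.05.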

7. Final Thoughts

Choosing the right learning rate isn’t magic—it’s a mix of science, trial, and intuition. Start with the basics, experiment, and don’t be afraid to make mistakes. Every misstep teaches you something valuable.

The Emotional Side of Tuning the Learning Rate

Okay, let’s get real for a moment. Tuning the learning rate can be frustrating. You might feel like you’re endlessly tweaking numbers, chasing elusive performance improvements. But it can also be rewarding. There’s something deeply satisfying about watching your model finally click into place after nailing the right learning rate.

So if you’re feeling stuck, remember: persistence pays off. Every experiment brings you closer to success.

Practical Tools to Make Life Easier

You don’t have to go it alone. These tools can help streamline the process:

- fastai: For its intuitive learning rate finder (Learner.lr_find).

- TensorBoard: To visualize loss curves and track progress.

- Optuna or Ray Tune: If you’re feeling ambitious, these platforms automate hyperparameter tuning, including learning rates.
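Under the hood, tools like Optuna or Ray Tune run many trials and keep the best one, usually with smarter search strategies than the one shown here. A bare-bones random search illustrates the idea. The objective fake_val_loss is a made-up stand-in for "train briefly and report validation loss", and the search bounds are assumptions. Sampling on a log scale matters because good learning rates are spread over orders of magnitude; in Optuna, the equivalent call inside an objective is trial.suggest_float("lr", 1e-5, 1e-1, log=True).

```python
import math
import random


def random_search_lr(objective, low=1e-5, high=1e-1, n_trials=20, seed=0):
    """Sample learning rates log-uniformly in [low, high] and keep the best one."""
    rng = random.Random(seed)
    best_lr, best_score = None, float("inf")
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(math.log10(low), math.log10(high))  # log-uniform sample
        score = objective(lr)  # e.g. validation loss after a short training run
        if score < best_score:
            best_lr, best_score = lr, score
    return best_lr, best_score


# Hypothetical stand-in objective: pretend validation loss is lowest near lr = 3e-3.
def fake_val_loss(lr):
    return (math.log10(lr) - math.log10(3e-3)) ** 2


best_lr, best_score = random_search_lr(fake_val_loss, n_trials=50)
print(f"best lr ~ {best_lr:.2g} (score {best_score:.3f})")
```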